Principal Component Analysis


Kevin Dunn


{{ClassSidebarYouTube
| date = 16, 23, 30 September 2011
| vimeoID1 = 9QzNOz_7i6U
| vimeoID2 = qDiPZp-FWc4
| vimeoID3 = y0Alf0VZ-1E
| vimeoID4 = XfH_p1WAydM
| vimeoID5 = QLB-UJ1dFiE
| vimeoID6 = bysqF41Mgc0
| vimeoID7 = p3i-XsviARM
| vimeoID8 = Qb28yc3eM0Q
| vimeoID9 =
| course_notes_PDF =
}}

Revision as of 20:39, 2 January 2017


Class 2

<pdfreflow>
class_date = 16 September 2011 [1.65 Mb]
button_label = Create my projector slides!
show_page_layout = 1
show_frame_option = 1
pdf_file = lvm-class-2.pdf
</pdfreflow> or you may download the class slides directly.

- Download these 3 CSV files and bring them to class on your computer:
  - Peas dataset: https://openmv.net/info/peas
  - Food texture dataset: https://openmv.net/info/food-texture
  - Food consumption dataset: https://openmv.net/info/food-consumption
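A minimal sketch of reading one of these files into Python with only the standard library. It assumes, without having checked the actual files, that each has a header row of column names, that the first column holds row labels, and that every other entry is numeric with no missing values. The function name `load_numeric_csv` and the file name in the comment are hypothetical.

```python
import csv
import io


def load_numeric_csv(source):
    """Read CSV text whose first row holds column names and whose first
    column holds row labels (an assumption; check the real file).
    Returns (row_labels, column_names, rows), rows being lists of floats."""
    reader = csv.reader(source)
    header = next(reader)
    column_names = header[1:]  # skip the header of the label column
    row_labels, rows = [], []
    for record in reader:
        if not record:  # ignore blank lines
            continue
        row_labels.append(record[0])
        # float() raises ValueError on empty or non-numeric entries
        rows.append([float(value) for value in record[1:]])
    return row_labels, column_names, rows


# Usage with a small made-up table. For a downloaded file, pass
# open("food-texture.csv", newline="") (hypothetical name) instead.
sample = io.StringIO("id,A,B\nr1,1.0,2.5\nr2,3.0,4.0\n")
labels, names, X = load_numeric_csv(sample)
print(labels, names, X)  # ['r1', 'r2'] ['A', 'B'] [[1.0, 2.5], [3.0, 4.0]]
```

If a file does contain missing entries, the `float()` conversion is the place to add handling for them.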

Background reading

- Reading for class 2

- Linear algebra topics you should be familiar with before class 2:
  - matrix multiplication
  - that multiplying a vector by a matrix transforms it from one coordinate system to another (we will review this in class)
  - linear combinations (read the first section of that website; we will review this in class)
  - the dot product of two vectors, and how it is related to the cosine of the angle between them (see the geometric interpretation section)
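The three ideas in this list can be checked on small numbers. The sketch below uses plain Python lists, and the helper names `mat_vec`, `dot` and `norm` are made up for this example. It shows that a matrix-vector product is a linear combination of the matrix's columns, that multiplying by a matrix whose rows are perpendicular unit-length directions gives a point's coordinates along those new axes, and that the dot product divided by the two vector lengths is the cosine of the angle between them.

```python
import math


def mat_vec(A, x):
    """Multiply matrix A (a list of rows) by the vector x."""
    return [sum(a_ij * x_j for a_ij, x_j in zip(row, x)) for row in A]


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(u_i * v_i for u_i, v_i in zip(u, v))


def norm(u):
    """Euclidean length of a vector."""
    return math.sqrt(dot(u, u))


# 1. A x is a linear combination of the columns of A, weighted by x.
A = [[1.0, 2.0],
     [3.0, 4.0]]
x = [10.0, -1.0]
col0 = [row[0] for row in A]
col1 = [row[1] for row in A]
combo = [x[0] * a + x[1] * b for a, b in zip(col0, col1)]
print(mat_vec(A, x), combo)  # [8.0, 26.0] [8.0, 26.0]

# 2. Change of coordinates: the rows of R are unit-length, perpendicular
#    axes rotated 45 degrees from the original ones, so R p gives the
#    coordinates of point p measured along those new axes.
c = 1 / math.sqrt(2)
R = [[c, c],
     [-c, c]]
p = [1.0, 1.0]
new_coords = mat_vec(R, p)
print([round(v, 4) for v in new_coords])  # [1.4142, 0.0]

# 3. Dot product and angle: u . v = |u| |v| cos(theta).
u = [1.0, 0.0]
v = [1.0, 1.0]
cos_theta = dot(u, v) / (norm(u) * norm(v))
print(round(math.degrees(math.acos(cos_theta)), 6))  # 45.0
```

The point (1, 1) lies entirely along the first rotated axis, which is why its second new coordinate is zero.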

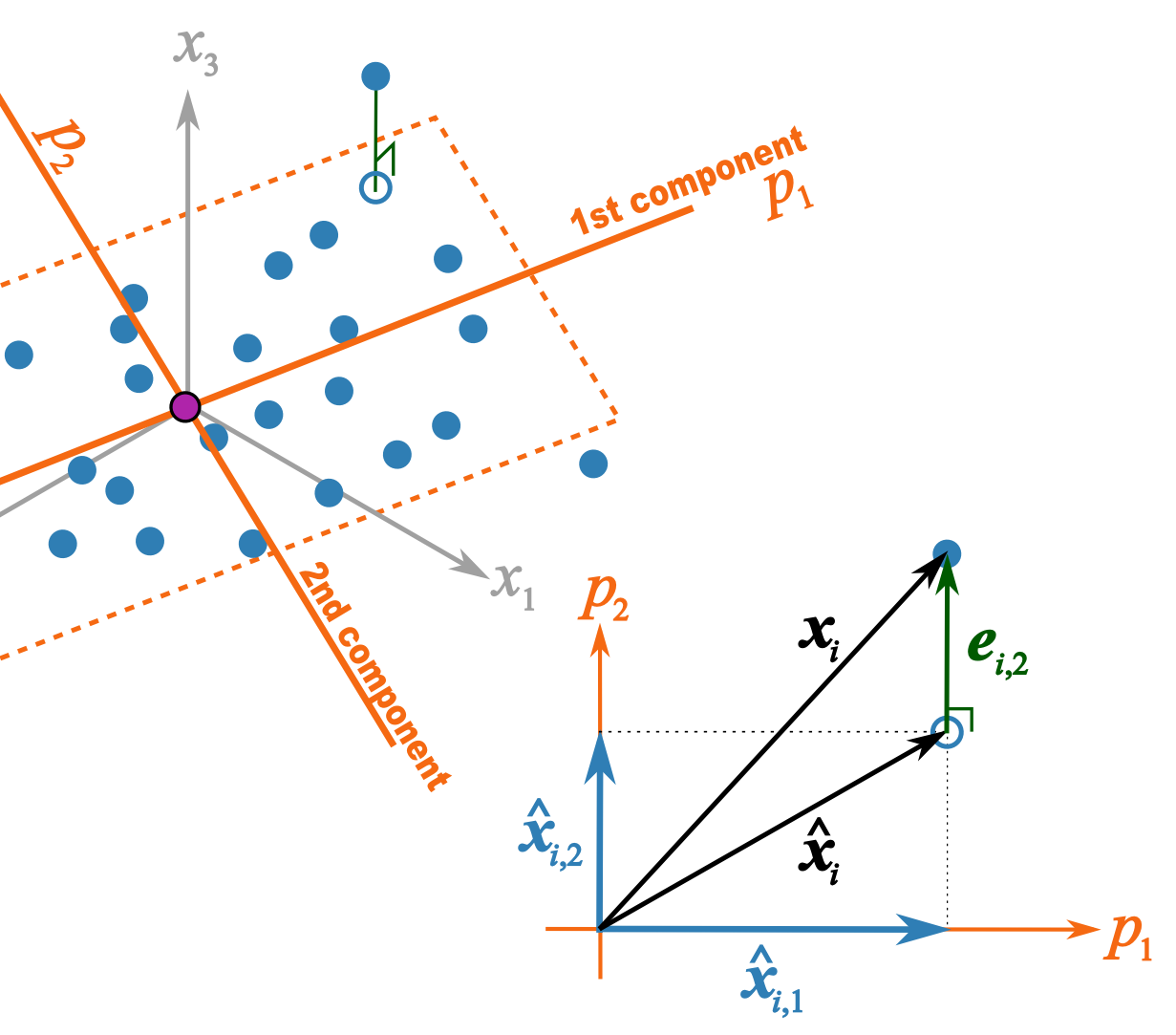

This illustration should help explain what I was trying to get across in class 2B.

Class 3

<pdfreflow>
class_date = 23, 30 September 2011 [580 Kb]
button_label = Create my projector slides!
show_page_layout = 1
show_frame_option = 1
pdf_file = lvm-class-3.pdf
</pdfreflow> or you may download the class slides directly.

Background reading

- Least squares:
  - what the objective function of least squares is
  - how to calculate the regression coefficient
  - understand that the residuals in least squares are orthogonal to
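A minimal sketch of these least-squares ideas for a single regressor, using made-up data. The slope and intercept below are the standard closed-form values that minimize the objective function, the sum of squared residuals $\sum_i (y_i - b_0 - b_1 x_i)^2$. The final checks confirm numerically that the residuals are orthogonal to the $x$ values and, because the model includes an intercept, that they also sum to zero and are orthogonal to the fitted values. The function name `fit_simple_least_squares` is hypothetical.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def fit_simple_least_squares(x, y):
    """Return (b0, b1) minimizing sum((y_i - b0 - b1*x_i)**2)."""
    n = len(x)
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    s_xy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    s_xx = sum((xi - x_mean) ** 2 for xi in x)
    b1 = s_xy / s_xx            # slope (regression coefficient)
    b0 = y_mean - b1 * x_mean   # intercept
    return b0, b1


x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0, 4.0, 5.0, 4.0, 5.0]
b0, b1 = fit_simple_least_squares(x, y)
fitted = [b0 + b1 * xi for xi in x]
residuals = [yi - fi for yi, fi in zip(y, fitted)]

# Value of the objective function at the optimum.
objective = sum(e ** 2 for e in residuals)
print(round(b0, 6), round(b1, 6), round(objective, 6))  # 2.2 0.6 2.4

# Orthogonality checks: each quantity is zero up to rounding error.
print(abs(dot(residuals, x)) < 1e-9,
      abs(sum(residuals)) < 1e-9,
      abs(dot(residuals, fitted)) < 1e-9)  # True True True
```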

- Some optimization theory:
  - how an optimization problem with equality constraints is written
  - the Lagrange multiplier principle for solving simple equality-constrained optimization problems
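One way these two ideas are commonly combined in PCA is sketched below. This is a standard derivation offered for orientation, and the class 3 slides may set it up differently. Assume $\mathbf{X}$ is a mean-centered data matrix and we seek the unit-length direction $\mathbf{p}$ along which the scores $\mathbf{t} = \mathbf{X}\mathbf{p}$ have the largest sum of squares. The equality constraint $\mathbf{p}^T\mathbf{p} = 1$ is handled with a Lagrange multiplier $\lambda$:

$$
\begin{aligned}
&\max_{\mathbf{p}} \; \mathbf{t}^T\mathbf{t} = \mathbf{p}^T \mathbf{X}^T \mathbf{X}\, \mathbf{p}
\quad \text{subject to} \quad \mathbf{p}^T \mathbf{p} = 1 \\
&\mathcal{L}(\mathbf{p}, \lambda) = \mathbf{p}^T \mathbf{X}^T \mathbf{X}\, \mathbf{p} - \lambda\left(\mathbf{p}^T \mathbf{p} - 1\right) \\
&\frac{\partial \mathcal{L}}{\partial \mathbf{p}} = 2\,\mathbf{X}^T \mathbf{X}\, \mathbf{p} - 2\lambda\, \mathbf{p} = \mathbf{0}
\quad \Longrightarrow \quad \mathbf{X}^T \mathbf{X}\, \mathbf{p} = \lambda\, \mathbf{p}
\end{aligned}
$$

Any stationary point is therefore an eigenvector of $\mathbf{X}^T\mathbf{X}$, and at such a point the objective equals $\mathbf{p}^T\mathbf{X}^T\mathbf{X}\mathbf{p} = \lambda\,\mathbf{p}^T\mathbf{p} = \lambda$. The maximum is reached at the eigenvector with the largest eigenvalue.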

Background reading

- Reading on cross-validation
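A minimal sketch of k-fold cross-validation using only the standard library. The reading may describe a different scheme, and in PCA cross-validation is typically used to choose the number of components. To keep the example short, the model validated here is a straight-line least-squares fit instead. Each fold of rows is held out in turn, the model is fitted to the remaining rows, and the squared prediction errors on the held-out rows are summed into a PRESS (prediction error sum of squares) value. The function names and the data are hypothetical.

```python
def k_fold_indices(n, k):
    """Split row indices 0..n-1 into k contiguous folds of near-equal size."""
    base, extra = divmod(n, k)
    folds, start = [], 0
    for i in range(k):
        size = base + (1 if i < extra else 0)
        folds.append(list(range(start, start + size)))
        start += size
    return folds


def fit_line(x, y):
    """Least-squares intercept and slope for y ~ b0 + b1*x."""
    n = len(x)
    x_mean, y_mean = sum(x) / n, sum(y) / n
    s_xy = sum((a - x_mean) * (b - y_mean) for a, b in zip(x, y))
    s_xx = sum((a - x_mean) ** 2 for a in x)
    b1 = s_xy / s_xx
    return y_mean - b1 * x_mean, b1


def press(x, y, k):
    """Sum of squared errors when each fold is predicted by a model
    fitted only to the other k-1 folds."""
    total = 0.0
    for test_rows in k_fold_indices(len(x), k):
        held_out = set(test_rows)
        train_rows = [i for i in range(len(x)) if i not in held_out]
        b0, b1 = fit_line([x[i] for i in train_rows],
                          [y[i] for i in train_rows])
        total += sum((y[i] - (b0 + b1 * x[i])) ** 2 for i in test_rows)
    return total


print(k_fold_indices(7, 3))  # [[0, 1, 2], [3, 4], [5, 6]]

x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
y_exact = [2.0 * xi + 1.0 for xi in x]                   # exactly on a line
y_noisy = [3.1, 4.8, 7.2, 9.1, 10.7, 13.2, 15.1, 16.8]   # made-up values
print(press(x, y_exact, 4) < 1e-12)  # True
print(press(x, y_noisy, 4))
```

Contiguous folds keep the sketch simple, but rows are often shuffled or assigned to folds in an interleaved pattern first so that each fold is representative. Each training set must also contain at least two distinct `x` values, or the slope is undefined.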