4.7. Least squares model analysis¶

Once we have fitted the \(b_0\) and \(b_1\) terms using the data and the equations from the prior section, it is of interest to know how well the model performed. That is what this section is about. In particular:

Analysis of variance: breaking down the data’s variability into components

Confidence intervals for the model coefficients, \(b_0\) and \(b_1\)

Prediction error estimates for the \(y\)-variable

We will also take a look at the interpretation of the software output.

In order to perform the second part we need to make a few assumptions about the data, and if the data follow those assumptions, then we can derive confidence intervals for the model parameters.

4.7.1. The variance breakdown¶

Recall that variability is what makes our data interesting. Without variance (i.e. just flat lines) we would have nothing to do. The analysis of variance is just a tool to show how much variability in the \(y\)-variable is explained by:

Doing nothing (no model: this implies \(\hat{y} = \overline{y}\))

The model (\(\hat{y}_i = b_0 + b_1 x_i\))

How much variance is left over in the errors, \(e_i\)

These 3 components must add up to the total variance we started with. By definition, the variance is computed about a mean, so the variance of no model (i.e. the “doing nothing” case) is zero. So the total variance in vector \(y\) is just the sum of the other two variances: the model’s variance, and the error variance. We show this next.

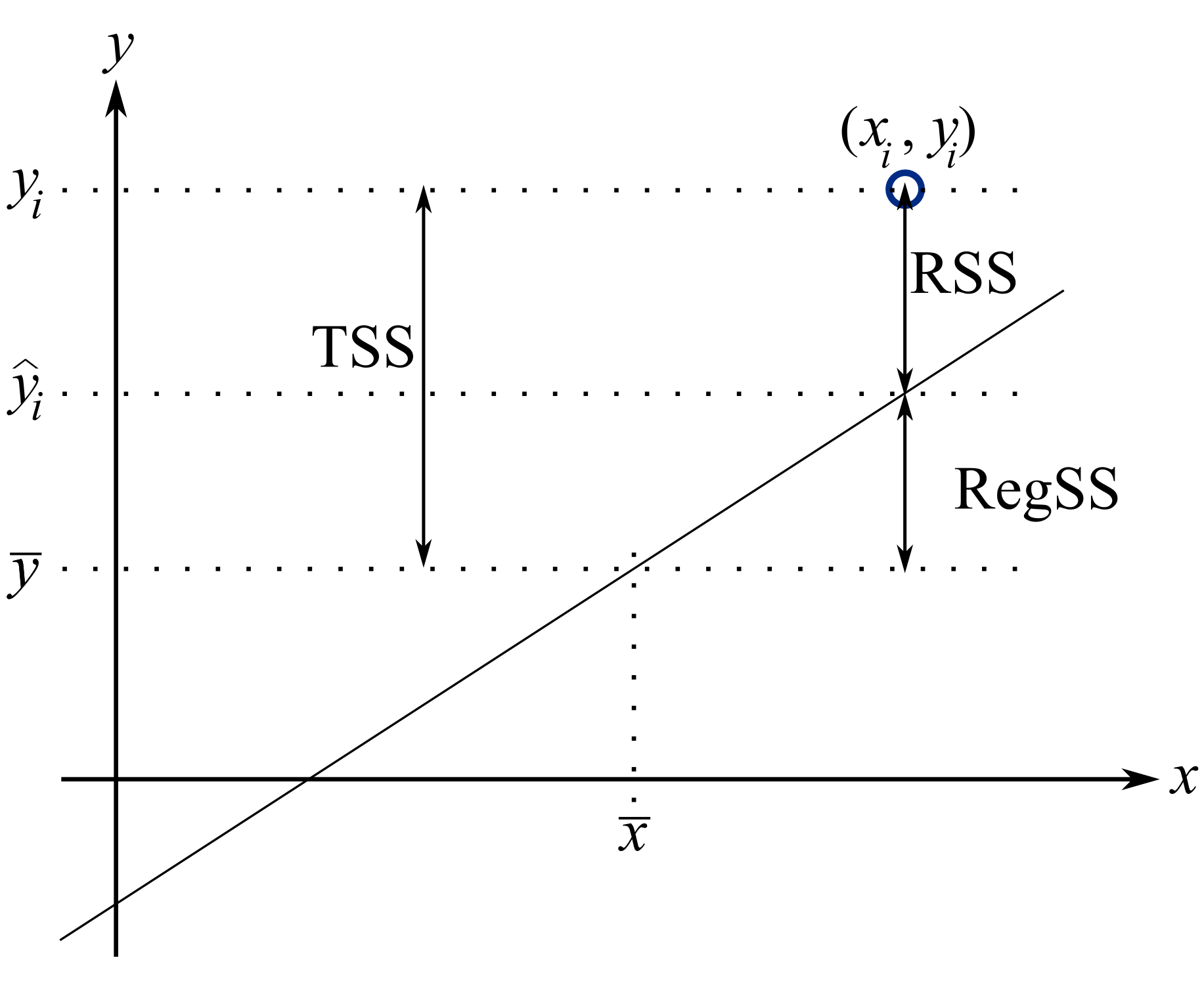

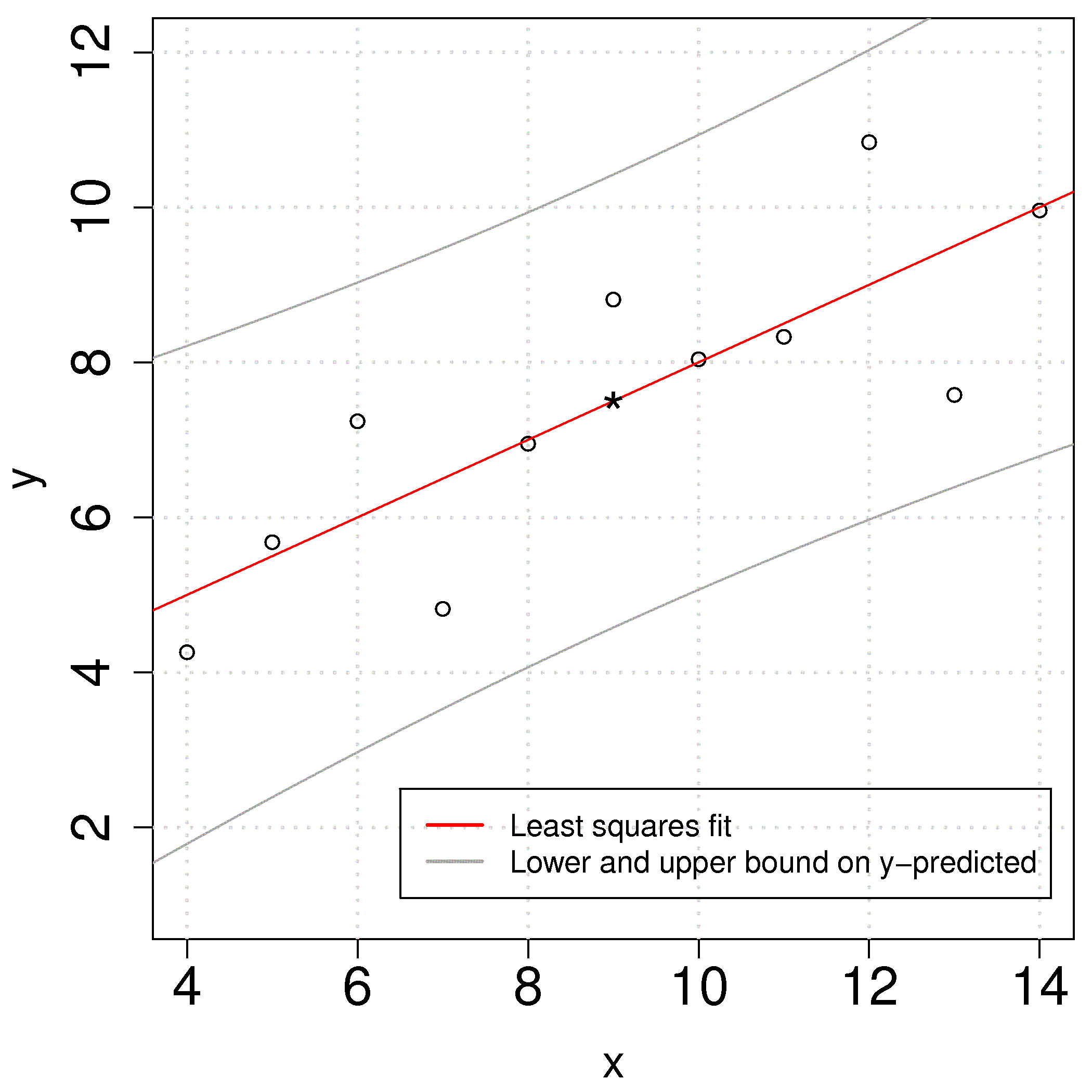

Using the accompanying figure, we see that geometrically, at any fixed value of \(x_i\), that any \(y\) value above or below the least squares line, call it \(y_i\) and shown with a circle, must obey the distance relationship:

The total sum of squares (TSS) is the total variance in the vector of \(y\)-data. This broken down into two components: the sum of squares due to regression, \(\sum \left(\hat{y}_i - \overline{y}\right)^2\), called RegSS, and the sum of squares of the residuals (RSS), \(\sum e_i^2 = e^T e\).

It is convenient to write these sums of squares (variances) in table form, called an Analysis of Variance (ANOVA) table:

Type of variance

Distance

Degrees of freedom

SSQ

Mean square

Regression

\(\hat{y}_i - \overline{y}\)

\(k\) (\(k=2\) in the examples so far)

RegSS

\(\text{RegSS}/k\)

Error

\(y_i - \hat{y}_i\)

\(n-k\)

RSS

\(\text{RSS}/(n-k)\)

Total

\(y_i - \overline{y}\)

\(n\)

TSS

\(\text{TSS}/n\)

4.7.1.1. Interpreting the standard error¶

The term \(S_E^2 = \text{RSS}/(n-k)\) is one way of quantifying the model’s performance. The value \(S_E = \sqrt{\text{RSS}/(n-k)} = \sqrt{(e^Te)/(n-k)}\) is called the standard error. It is really just the standard deviation of the error term, accounting correctly for the degrees of freedom.

Example: Assume we have a model for predicting batch yield in kilograms from \(\mathrm{x}\) = raw material purity, what does a standard error of 3.4 kg imply?

Answer: Recall if the assumption of normally distributed errors is correct, then this value of 3.4 kg indicates that about two thirds of the yield predictions will lie within \(\pm 3.4\) kg, and that 95% of the yield predictions will lie within \(\pm 2 \times 3.4\) kg. We will quantify the prediction interval more precisely, but the standard error is a good approximation for the error of \(\mathrm{y}\).

4.7.1.2. Exercise¶

For two extreme cases:

\(y_i = e_i\), i.e. where \(b_0 = 0\) and \(b_1 = 0\). In other words, our \(y_i\) measurements are just random noise.

\(y_i = b_0 + b_1 x_i + e_i\), for any values of \(b_0\) and \(b_1\), that model fits the data perfectly, with no residuals.

Do the following in the space below:

draw a generic plot

create an ANOVA table with fake values

write down the value of the ratio \(\dfrac{\text{RegSS}}{\text{TSS}}\)

interpret what this ratio means: \(F_0 = \dfrac{\text{mean square of regression}}{\text{mean square of residuals}}\)

From this exercise we learn that:

The null model (\(y_i = e_i\)) has ratio \(\dfrac{\text{RegSS}}{\text{TSS}} = 0\).

Models where the fit is perfect have a ratio \(\dfrac{\text{RegSS}}{\text{TSS}} = 1\). This number is called \(R^2\), and we will see why it is called that next.

4.7.1.3. Derivation of \(R^2\)¶

As introduced by example in the previous part, \(R^2 = \dfrac{\text{RegSS}}{\text{TSS}} = \dfrac{\sum_i{ \left(\hat{y}_i - \overline{\mathrm{y}}\right)^2}}{\sum_i{ \left(y_i - \overline{\mathrm{y}}\right)^2}}\): simply the ratio between the variance we can explain with the model (RegSS) and the total variance we started off with (TSS). We can also write that \(R^2 = 1-\dfrac{\text{RSS}}{\text{TSS}}\), based on the fact that TSS = RegSS + RSS.

From the above ratios it is straightforward to see that if \(R^2 = 0\), it requires that \(\hat{y}_i = \overline{\mathrm{y}}\): we are predicting just a flat line, the mean of the \(\mathrm{y}\) data. On the other extreme, an \(R^2 = 1\) implies that \(\hat{y}_i = y_i\), we have perfect predictions for every data point.

The nomenclature \(R^2\) comes from the fact that it is the square of the correlation between \(\mathrm{x}\) and \(\mathrm{y}\). Recall from the correlation section that

and can range in value from \(-1\) to \(+1\). The \(R^2\) ranges from 0 to +1, and is the square of \(r(x,y)\). \(R^2\) is just a way to tell how far we are between predicting a flat line (no variation) and the extreme of being able to predict the model building data, \(y_i\), exactly.

The \(R^2\) value is likely well known to anyone that has encountered least squares before. This number must be interpreted with caution. It is most widely abused as a way to measure “how good is my model”.

These two common examples illustrate the abuse. You likely have said or heard something like this before:

“the \(R^2\) value is really high, 90%, so this is a good model”.

“Wow, that’s a really low \(R^2\), this model can’t be right - it’s no good”.

How good, or how suitable a model is for a particular purpose is almost never related to the \(R^2\) value. The goodness of a model is better assessed by:

your engineering judgment: does the interpretation of model parameters make sense?

use testing data to verify the model’s predictive performance,

using cross-validation tools (we will see this topic later on) to see how well the model performs on new, unseen and unused testing data.

We will see later on that \(R^2\) can be arbitrarily increased by adding terms to the linear model, as we will see in the section on multiple linear regression (MLR). So sometimes you will see the adjusted \(R^2\) used to account for the \(k\) terms used in the model:

where \(k=2\) for the case of estimating a model \(y_i = b_0 + b_1 x_i\), as there are 2 parameters.

4.7.2. Confidence intervals for the model coefficients \(b_0\) and \(b_1\)¶

Note

A good reference for this section is the book by Fox (Chapter 6), and the book by Draper and Smith.

Up to this point we have made no assumptions about the data. In fact we can calculate the model estimates, \(b_0\) and \(b_1\) as well as predictions from the model without any assumptions on the data. It is only when we need additional information such as confidence intervals for the coefficients and prediction error estimates that we must make assumptions.

Recall the \(b_1\) coefficient represents the average effect on \(\mathrm{y}\) when changing the \(\mathrm{x}\)-variable by 1 unit. Let’s say you are estimating a reaction rate (kinetics) from a linear least squares model, a standard step in reactor design, you would want a measure of confidence of your coefficient. For example, if you calculate the reaction rate as \(k = b_1 = 0.81 \,\text{s}^{-1}\) you would benefit from knowing whether the 95% confidence interval was \(k = 0.81 \pm 0.26 \,\text{s}^{-1}\) or \(k = 0.81 \pm 0.68 \,\text{s}^{-1}\). In the latter case it is doubtful whether the reaction rate is of practical significance. Point estimates of the least squares model parameters are satisfactory, but the confidence interval information is richer to interpret.

We first take a look at some assumptions in least squares modelling, then return to deriving the confidence interval.

4.7.2.1. Assumptions required for analysis of the least squares model¶

Recall that the population (true) model is \(y_i = \beta_0 + \beta_1 x_i + \epsilon_i\) and \(b_0\) and \(b_1\) are our estimates of the model’s coefficients, and \(\mathrm{e}\) be the estimate of the true error \(\epsilon\). Note we are assuming imperfect knowledge of the \(y_i\) by lumping all errors into \(e_i\). For example, measurement error, structural error (we are not sure the process follows a linear structure), inherent randomness, and so on.

Furthermore, our derivation for the confidence intervals of \(b_0\) and \(b_1\) requires that we assume:

Linearity of the model, and that the values of \(\mathrm{x}\) are fixed (have no error). This implies that the error captured by \(\epsilon\) is the error of \(\mathrm{y}\), since the \(\beta_0 + \beta_1 \mathrm{x}\) terms are fixed.

In an engineering situation this would mean that your \(\mathrm{x}\) variable has much less uncertainty than the \(\mathrm{y}\) variable; and is often true in many situations.

The variance of \(\mathrm{y}\) is the same (constant) at all values of \(\mathrm{x}\), known as the constant error variance assumption.

The variability of \(\mathrm{y}\) can be non-constant in several practical cases (e.g. our measurement accuracy deteriorates at extreme high and low levels of \(\mathrm{x}\)).

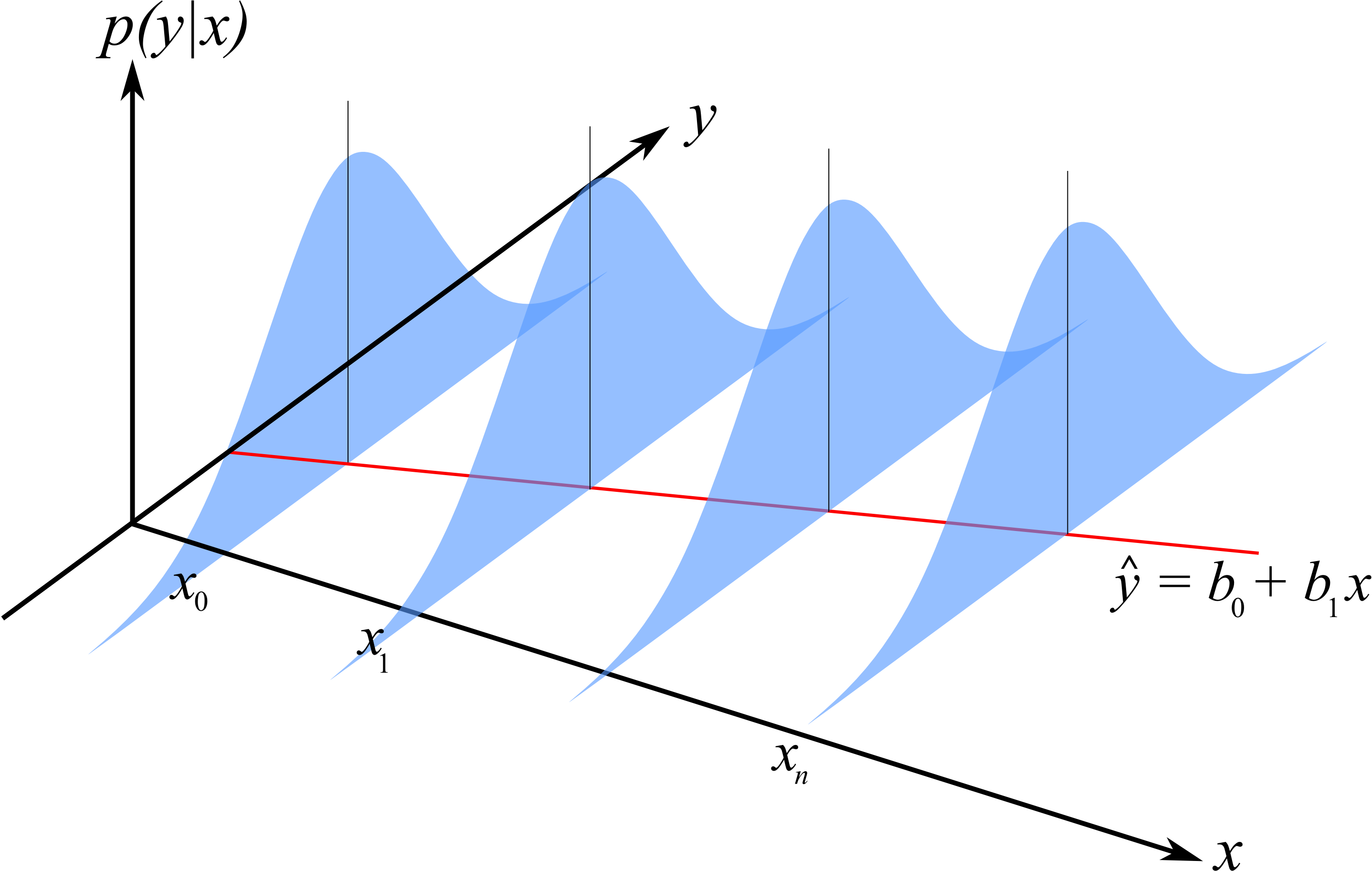

Illustration of the constant error variance assumption and the normally distributed error assumption.

The errors are normally distributed: \(e_i \sim \mathcal{N}(0, \sigma_\epsilon^2)\). This also implies that \(y_i \sim \mathcal{N}(\beta_0 + \beta_1x_i, \sigma_\epsilon^2)\) from the first linearity assumption.

Each error is independent of the other. This assumption is often violated in cases where the observations are taken in time order on slow moving processes (e.g. if you have a positive error now, your next sample is also likely to have a positive error). We will have more to say about this later when we check for independence with an autocorrelation test.

In addition to the fact that the \(\mathrm{x}\) values are fixed, we also assume they are independent of the error. If the \(\mathrm{x}\) value is fixed (i.e. measured without error), then it is already independent of the error.

When the \(\mathrm{x}\) values are not fixed, there are cases where the error gets larger as \(\mathrm{x}\) gets smaller/larger.

All \(y_i\) values are independent of each other. This again is violated in cases where the data are collected in time order and the \(y_i\) values are autocorrelated.

Note

Derivation of the model’s coefficients do not require these assumptions, only the derivation of the coefficient’s confidence intervals require this.

Also, if we want to interpret the model’s \(S_E\) as the estimated standard deviation of the residuals, then it helps if the residuals are normally distributed.

4.7.2.2. Confidence intervals for \(\beta_0\) and \(\beta_1\)¶

Recall from our discussions on confidence intervals that we need to know the mean and variance of the population from which \(b_0\) and \(b_1\) come. Specifically for the least squares case:

Once we know those parameters, we can create a \(z\)-value for \(b_0\) and \(b_1\), and then calculate the confidence interval for \(\beta_0\) and \(\beta_1\). So our quest now is to calculate \(\mathcal{V}\{\beta_0\}\) and \(\mathcal{V}\{\beta_1\}\), and we will use the 6 assumptions we made in the previous part.

Start from the equations that define \(b_0\) and \(b_1\) in the prior section where we showed that:

That last form of expressing \(b_1\) shows that every data point contributes a small amount to the coefficient \(b_1\). But notice how it is broken into 2 pieces: each term in the sum has a component due to \(m_i\) and one due to \(y_i\). The \(m_i\) term is a function of the x-data only, and since we assume the x’s are measured without error, that term has no error. The \(y_i\) component is the only part that has error.

So we can write:

where \(j\) is an index for all data points used to build the least squares model.

Questions:

So now apart from the numerator term, how could you decrease the error in your model’s \(b_1\) coefficient?

Use samples that are far from the mean of the \(\mathrm{x}\)-data.

Use more samples.

What do we use for the numerator term \(\mathcal{V}\{y_i\}\)?

This term represents the variance of the \(y_i\) values at a given point \(x_i\). If (a) there is no evidence of lack-of-fit, and (b) if \(\mathrm{y}\) has the same error at all levels of \(\mathrm{x}\), then we can write that \(\mathcal{V}\{y_i\}\) = \(\mathcal{V}\{e_i\} = \dfrac{\sum{e_i^2}}{n-k}\), where \(n\) is the number of data points used, and \(k\) is the number of coefficients estimated (2 in this case). The \(n-k\) quantity is the degrees of freedom.

Now for the variance of \(b_0 = \overline{\mathrm{y}} - b_1 \overline{\mathrm{x}}\). The only terms with error are \(b_1\), and \(\overline{\mathrm{y}}\). So we can derive that:

Summary of important equations

For convenience we will define some short-hand notation, which is common in least squares:

You will see that \(S_E\) is an estimate of the standard deviation of the error (residuals), while \(S_E(b_0)\) and \(S_E(b_1)\) are the standard deviations of estimates for \(b_0\) and \(b_1\) respectively.

Now it is straight forward to construct confidence intervals for the least squares model parameters. You will also realize that we have to use the \(t\)-distribution, because we are using an estimate of the variance.

Example

Returning back to our ongoing example, we can calculate the confidence interval for \(\beta_0\) and \(\beta_1\). We calculated earlier already that \(b_0\) = 3.0 and \(b_1\) = 0.5. Using these values we can calculate the standard error:

Use that \(S_E\) value to calculate the confidence intervals for \(\beta_0\) and \(\beta_1\), and use that \(c_t = 2.26\) at the 95% confidence level. You can calculate this value in R using qt(0.975, df=(N-2)). There are \(n-2\) degrees of freedom, the number of degrees of freedom used to calculate \(S_E\).

First calculate the \(S_E\) value and the standard errors for the \(b_0\) and \(b_1\). Substitute these into the equation for the confidence interval and calculate:

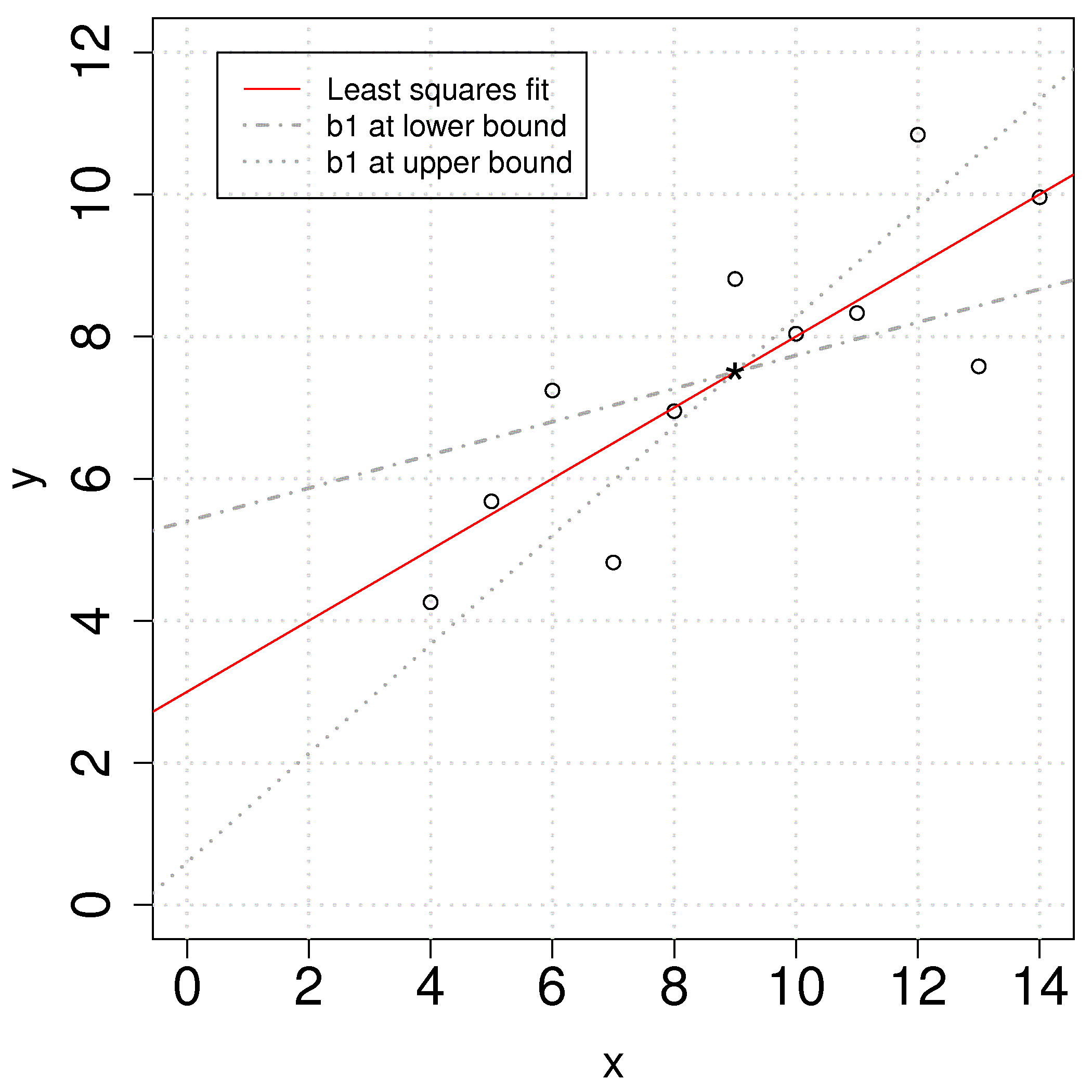

The 95% confidence interval for \(\beta_0\):

The confidence interval for \(\beta_1\):

The plot shows the effect of varying the slope parameter, \(b_1\), from its lower bound to its upper bound. Notice that the slope always passes through the mean of the data \((\overline{x}, \overline{y})\).

In many cases the confidence interval for the intercept is not of any value because the data for \(\mathrm{x}\) is so far away from zero, or the true value of the intercept is not of concern for us.

4.7.3. Prediction error estimates for the y-variable¶

Apart from understanding the error in the model’s coefficient, we also would like an estimate of the error when predicting \(\hat{y}_i\) from the model, \(y_i = b_0 + b_1 x_i + e_i\) for a new value of \(x_i\). This is known as the prediction interval, or prediction error interval.

4.7.3.1. A naive first attempt¶

We might expect the error is related to the average size of the residuals. After all, our assumptions we made earlier showed the standard error of the residuals was the standard error of the \(\mathrm{y}\): \(S_E^2 = \mathcal{V}\left\{e_i\right\} = \mathcal{V}\left\{y_i\right\} = \dfrac{\sum{e_i^2}}{n-k}\).

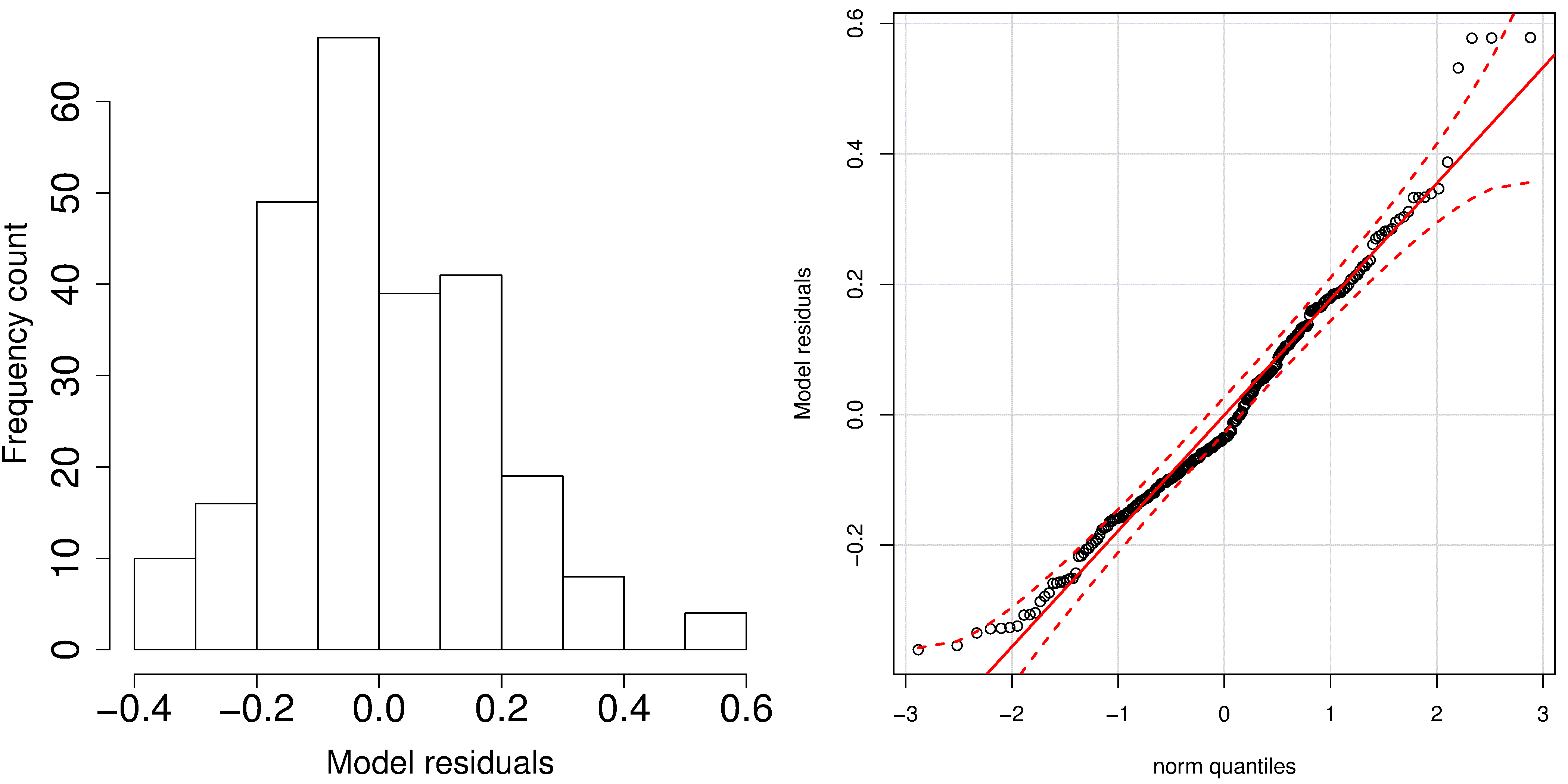

A typical histogram of the residuals looks as shown here: it is always centered around zero, and appears to be normally distributed. So we could expect to write our prediction error as \(\hat{y}_\text{new} = \left(b_0 + b_1 x_\text{new}\right) \pm c \cdot S_E\), where \(c\) is the number of standard deviations around the average residual, for example we could have set \(c=2\), approximating the 95% confidence limit.

But there is something wrong with that error estimate. It says that our prediction error is constant at any value of \(x_i\), even at values far outside the range where we built the model. This is a naive estimate of the prediction error. We have forgotten that coefficients \(b_0\) and \(b_1\) have error, and that error must be propagated into \(\hat{y}_\text{new}\).

This estimate is however a reasonable guess for the prediction interval when you only know the model’s \(S_E\) and don’t have access to a calculator or computer to calculate the proper prediction interval, shown next.

4.7.3.2. A better attempt to construct prediction intervals for the least squares model¶

Note

A good reference for this section is Draper and Smith, Applied Regression Analysis, page 79.

The derivation for the prediction interval is similar to that for \(b_1\). We require an estimate for the variance of the predicted \(\mathrm{y}\) at at given value of \(\mathrm{x}\). Let’s fix our \(\mathrm{x}\) value at \(x_*\) and since \(b_0 = \overline{\mathrm{y}} - b_1 \overline{\mathrm{x}}\), we can write the prediction at this fixed \(\mathrm{x}\) value as \(\hat{y}_* = \overline{\mathrm{y}} - b_1(x_* - \overline{\mathrm{x}})\).

You may read the reference texts for the interesting derivation of this variance. However, this is only the variance of the average predicted value of \(\mathrm{y}\). In other words, it is the variance we expect if we repeatedly brought in observations at \(x_*\). The prediction error of an individual observation, \(x_i\), and its corresponding prediction, \(\hat{y}_i\), is inflated slightly further:

\(\mathcal{V}\{\hat{y}_i\} = S_E^2\left(1 + \dfrac{1}{n} + \dfrac{(x_i - \overline{\mathrm{x}})^2}{\sum_j{\left( x_j - \overline{\mathrm{x}} \right)^2}}\right)\), where \(j\) is the index for all points used to build the least squares model.

We may construct a prediction interval in the standard manner, assuming that \(\hat{y}_i \sim \mathcal{N}\left( \overline{\hat{y}_i}, \mathcal{V}\{\hat{y}_i\} \right)\). We will use an estimate of this variance since we do not know the population variance. This requires we use the \(t\)-distribution with \(n-k\) degrees of freedom, at a given degree of confidence, e.g. 95%.

This is a prediction interval for a new prediction, \(\hat{y}_i\) given a new \(\mathrm{x}\) value, \(x_i\). For example, if \(\hat{y}_i\) = 20 at a given value of \(x_i\), and if \(c_t \sqrt{V\{\hat{y}_i\}}\) = 5, then you will usually see written in reports and documents that, the prediction was \(20 \pm 5\). A more correct way of expressing this concept is to say the true prediction at the value of \(x_i\) lies within a bound from 15 to 25, with 95% confidence.

4.7.3.3. Implications of the prediction error of a new \(\mathrm{y}\)¶

Let’s understand the interpretation of \(\mathcal{V}\{\hat{y}_i\} = S_E^2 \left(1 + \dfrac{1}{n} + \dfrac{(x_i - \overline{\mathrm{x}})^2}{\sum_j{\left( x_j - \overline{\mathrm{x}} \right)^2}}\right)\) as the variance of the predicted \(\hat{y}_i\) at the given value of \(x_i\). Using the previous example where we calculated the least squares line, now:

Now let’s say our \(x_\text{new}\) happens to be \(\overline{\mathrm{x}}\), the center point of our data. Write down the upper and lower value of the prediction bounds for the corresponding \(\hat{y}\), given that \(c_t = 2.26\) at the 95% confidence level.

The LB = \(\hat{y}_i - c_t \sqrt{V\{\hat{y}_i\}} = 7.5 - 2.26 \times \sqrt{(1.237)^2 \left(1+\dfrac{1}{11} + \dfrac{(x_i - \overline{\mathrm{x}})^2}{\sum_j{\left( x_j - \overline{\mathrm{x}} \right)^2}}\right)} = 7.5 - 2.26 \times 1.29 = 7.50 - 2.917 = 4.58\)

The UB = \(\hat{y}_i + c_t \sqrt{V\{\hat{y}_i\}} = 7.5 + 2.26 \times \sqrt{(1.237)^2 \left(1+\dfrac{1}{11} + \dfrac{(x_i - \overline{\mathrm{x}})^2}{\sum_j{\left( x_j - \overline{\mathrm{x}} \right)^2}}\right)} = 7.5 + 2.26 \times 1.29 = 7.50 + 2.917 = 10.4\)

Now move left and right, away from \(\overline{\mathrm{x}}\), and mark the confidence intervals. What general shape do they have?

The confidence intervals have a quadratic shape due to the square term under the square root. The smallest prediction error will always occur at the center of the model, and expands progressively wider as one moves away from the model center. This is illustrated in the figure and makes intuitive sense as well.

4.7.4. Interpretation of software output¶

To complete this section we show how to interpret the output from computer software packages. Most packages have very standardized output, and you should make sure that whatever package you use, that you can interpret the estimates of the parameters, their confidence intervals and get a feeling for the model’s performance.

The following output is obtained in R for the example we have been using in this section. The Python version follows below.

and produces this output:

Call:

lm(formula = y ~ x)

Residuals:

Min 1Q Median 3Q Max

-1.92127 -0.45577 -0.04136 0.70941 1.83882

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 3.0001 1.1247 2.667 0.02573 *

x 0.5001 0.1179 4.241 0.00217 **

---

Signif. codes: 0 `***' 0.001 `**' 0.01 `*' 0.05 `.' 0.1 ` ' 1

Residual standard error: 1.237 on 9 degrees of freedom

Multiple R-squared: 0.6665, Adjusted R-squared: 0.6295

F-statistic: 17.99 on 1 and 9 DF, p-value: 0.002170

Make sure you can calculate the following values using the equations developed so far, based on the above software output:

The intercept term \(b_0\) = 3.0001.

The slope term \(b_1\) = 0.5001.

The standard error of the model, \(S_E\) = 1.237, using \(n-k = 11 - 2 = 9\) degrees of freedom.

Using the standard error, calculate the standard error for the intercept = \(S_E(b_0) = 1.1247\).

Using the standard error, calculate the standard error for the slope = \(S_E(b_1) = 0.1179\).

The \(z\)-value for the \(b_0\) term is 2.667 (R calls this the

t valuein the printout, but in our notes we have called this \(z = \dfrac{b_0 - \beta_0}{S_E(b_0)}\); the value that we compare to the \(t\)-statistic and used to create the confidence interval).The \(z\)-value for the \(b_1\) term is 4.241 (see the above comment again).

The two probability values,

Pr(>|t|), for \(b_0\) and \(b_1\) should be familiar to you; they are the probability with which we expect to find a value of \(z\) greater than the calculated \(z\)-value (calledt valuein the output above). The smaller the number, the more confident we can be the confidence interval contains the parameter estimate.You can construct the confidence interval for \(b_0\) or \(b_1\) by using their reported standard errors and multiplying by the corresponding \(t\)-value. For example, if you want 99% confidence limits, then look up the 99% values for the \(t\)-distribution using \(n-k\) degrees of freedom, in this case it would be

qt((1-0.99)/2, df=9), which is \(\pm 3.25\). So the 99% confidence limits for the slope coefficient would be \([0.5 - 3.25 \times 0.1179; 0.5 + 3.25 \times 0.1179] = [0.12; 0.88]\).The \(R^2 = 0.6665\) value.

Be able to calculate the residuals: \(e_i = y_i - \hat{y}_i = y_i - b_0 - b_1 x_i\). We expect the median of the residuals to be around 0, and the rest of the summary of the residuals gives a feeling for how far the residuals range about zero.

Using Python, you can run the following code:

which produces the following output:

OLS Regression Results

==============================================================================

Dep. Variable: y R-squared: 0.667

Model: OLS Adj. R-squared: 0.629

Method: Least Squares F-statistic: 17.99

Date: Tue, 01 Jan 2019 Prob (F-statistic): 0.00217

Time: 00:00:00 Log-Likelihood: -16.841

No. Observations: 11 AIC: 37.68

Df Residuals: 9 BIC: 38.48

Df Model: 1

Covariance Type: nonrobust

==============================================================================

coef std err t P>|t| [0.025 0.975]

------------------------------------------------------------------------------

const 3.0001 1.125 2.667 0.026 0.456 5.544

x1 0.5001 0.118 4.241 0.002 0.233 0.767

==============================================================================

Omnibus: 0.082 Durbin-Watson: 3.212

Prob(Omnibus): 0.960 Jarque-Bera (JB): 0.289

Skew: -0.122 Prob(JB): 0.865

Kurtosis: 2.244 Cond. No. 29.1

==============================================================================

Standard error = 1.2366033227263207

As for the R code, we can see at a glance:

The intercept term \(b_0\) = 3.0001.

The slope term \(b_1\) = 0.5001.

The standard error of the model, \(S_E\) = 1.237, using \(n-k = 11 - 2 = 9\) degrees of freedom. The summary output table does not show the standard error, but you can get it from

np.sqrt(results.scale), whereresultsis the Python object from fitting the linear model.Using the standard error, calculate the standard error for the intercept = \(S_E(b_0) = 1.1247\), which is reported directly in the table.

Using the standard error, calculate the standard error for the slope = \(S_E(b_1) = 0.1179\), which is reported directly in the table.

The \(z\)-value for the \(b_0\) term is 2.667 (Python calls this the

t-value in the printout, but in our notes we have called this \(z = \dfrac{b_0 - \beta_0}{S_E(b_0)}\); the value that we compare to the \(t\)-statistic and used to create the confidence interval).The \(z\)-value for the \(b_1\) term is 4.241 (see the above comment again).

The two probability values,

P>|t|, for \(b_0\) and \(b_1\) should be familiar to you; they are the probability with which we expect to find a value of \(z\) greater than the calculated \(z\)-value (calledt valuein the output above). The smaller the number, the more confident we can be the confidence interval contains the parameter estimate.You can construct the confidence interval for \(b_0\) or \(b_1\) by using their reported standard errors and multiplying by the corresponding \(t\)-value. For example, if you want 99% confidence limits, then look up the 99% values for the \(t\)-distribution using \(n-k\) degrees of freedom, in this case it would be

from scipy.stats import t; t.ppf(1-(1-0.99)/2, df=9), which is \(\pm 3.25\). So the 99% confidence limits for the slope coefficient would be \([0.5 - 3.25 \times 0.1179; 0.5 + 3.25 \times 0.1179] = [0.117; 0.883]\). However, the table output gives you the 95% confidence interval. Under the column0.025and0.975(leaving 2.5% in the lower and upper tail respectively). For the slope coefficient, for example, this interval is [0.233; 0.767]. If you desire, for example, the 99% confidence interval, you can adjust the code:print(results.summary(alpha=1-0.99))The \(R^2 = 0.6665\) value.

Be able to calculate the residuals: \(e_i = y_i - \hat{y}_i = y_i - b_0 - b_1 x_i\).