4.10. More than one variable: multiple linear regression (MLR)¶

We now move to including more than one explanatory \(\mathrm{x}\) variable in the linear model. We will:

introduce some matrix notation for this section

show how the optimization problem is solved to estimate the model parameters

how to interpret the model coefficients

extend our tools from the previous section to analyze the MLR model

use integer (yes/no or on/off) variables in our model.

First some motivating examples:

A relationship exists between \(x_1\) = reactant concentration and \(x_2\) = temperature with respect to \(y\) = reaction rate. We already have a linear model between \(y = b_0 + b_1x_1\), but we want to improve our understanding of the system by learning about the temperature effect, \(x_2\).

We want to predict melt index in our reactor from the reactor temperature, but we know that the feed flow and pressure are also good explanatory variables for melt index. How do these additional variables improve the predictions?

We know that the quality of our plastic product is a function of the mixing time, and also the mixing tank in which the raw materials are blended. How do we incorporate the concept of a mixing tank indicator in our model?

4.10.1. Multiple linear regression: notation¶

To help the discussion below it is useful to omit the least squares model’s intercept term. We do this by first centering the data.

This indicates that if we fit a model where the \(\mathrm{x}\) and \(\mathrm{y}\) vectors are first mean-centered, i.e. let \(x = x_\text{original} - \text{mean}\left(x_\text{original} \right)\) and \(y = y_\text{original} - \text{mean}\left(y_\text{original} \right)\), then we still estimate the same slope for \(b_1\), but the intercept term is zero. All we gain from this is simplification of the subsequent analysis. Of course, if you need to know what \(b_0\) was, you can use the fact that \(b_0 = \overline{y} - b_1 \overline{x}\). Nothing else changes: the \(R^2, S_E, S_E(b_1)\) and all other model interpretations remain the same. You can easily prove this for yourself.

So in the rest of the this section we will omit the model’s intercept term, since it can always be recovered afterwards.

The general linear model is given by:

And writing the last equation \(n\) times over for each observation in the data:

where:

\(\mathbf{y}\): \(n \times 1\)

\(\mathbf{X}\): \(n \times k\)

\(\mathbf{b}\): \(n \times 1\)

\(\mathbf{e}\): \(n \times 1\)

4.10.2. Estimating the model parameters via optimization¶

As with the simple least squares model, \(y = b_0 + b_1 x\), we aim to minimize the sum of squares of the errors in vector \(\mathbf{e}\). This least squares objective function can be written compactly as:

\[\begin{split}\begin{array}{rl} f(\mathbf{b}) &= \mathbf{e}^T\mathbf{e} \\ &= \left(\mathbf{y} - \mathbf{X} \mathbf{b} \right)^T \left( \mathbf{y} - \mathbf{X} \mathbf{b} \right) \\ &= \mathbf{y}^T\mathbf{y} - 2 \mathbf{y}^T\mathbf{X}\mathbf{b} + \mathbf{b}\mathbf{X}^T\mathbf{X}\mathbf{b} \end{array}\end{split}\]

Taking partial derivatives with respect to the entries in \(\mathbf{b}\) and setting the result equal to a vector of zeros, you can prove to yourself that \(\mathbf{b} = \left( \mathbf{X}^T\mathbf{X} \right)^{-1}\mathbf{X}^T\mathbf{y}\). You might find the Matrix Cookbook useful in solving these equations and optimization problems.

Three important relationships are now noted:

\(\mathcal{E}\{\mathbf{b}\} = \mathbf{\beta}\)

\(\mathcal{V}\{\mathbf{b}\} = \left( \mathbf{X}^T\mathbf{X} \right)^{-1} S_E^2\)

An estimate of the standard error is given by: \(\sigma_e \approx S_E = \sqrt{\dfrac{\mathbf{e}^T\mathbf{e}}{n-k}}\), where \(k\) is the number of parameters estimated in the model and \(n\) is the number of observations.

These relationships imply that our estimates of the model parameters are unbiased (the first line), and that the variability of our parameters is related to the \(\mathbf{X}^T\mathbf{X}\) matrix and the model’s standard error, \(S_E\).

Going back to the single variable case we showed in the section where we derived confidence intervals for \(b_0\) and \(b_1\) that:

\[\mathcal{V}\{b_1\} = \dfrac{S_E^2}{\sum_j{\left( x_j - \overline{\mathrm{x}} \right)^2}}\]

Notice that our matrix definition, \(\mathcal{V}\{\mathbf{b}\} = \left( \mathbf{X}^T\mathbf{X} \right)^{-1} S_E^2\), gives exactly the same result, remembering the \(\mathrm{x}\) variables have already been centered in the matrix form. Also recall that the variability of these estimated parameters can be reduced by (a) taking more samples, thereby increasing the denominator size, and (b) by including observations further away from the center of the model.

Example

Let \(x_1 = [1, 3, 4, 7, 9, 9]\), and \(x_2 = [9, 9, 6, 3, 1, 2]\), and \(y = [3, 5, 6, 8, 7, 10]\). By inspection, the \(x_1\) and \(x_2\) variables are negatively correlated, and the \(x_1\) and \(y\) variables are positively correlated (also positive covariance). Refer to the definition of covariance in an equation from the prior section.

After mean centering the data we have that \(x_1 = [-4.5, -2.5, -1.5 , 1.5 , 3.5, 3.5]\), and \(x_2 = [4, 4, 1, -2, -4, -3]\) and \(y = [-3.5, -1.5, -0.5, 1.5, 0.5, 3.5]\). So in matrix form:

The \(\mathbf{X}^T\mathbf{X}\) and \(\mathbf{X}^T\mathbf{y}\) matrices can then be calculated as:

Notice what these matrices imply (remembering that the vectors in the matrices have been centered). The \(\mathbf{X}^T\mathbf{X}\) matrix is a scaled version of the covariance matrix of \(\mathbf{X}\). The diagonal terms show how strongly the variable is correlated with itself, which is the variance, and always a positive number. The off-diagonal terms are symmetrical, and represent the strength of the relationship between, in this case, \(x_1\) and \(x_2\). The off-diagonal terms for two uncorrelated variables would be a number close to, or equal to zero.

The inverse of the \(\mathbf{X}^T\mathbf{X}\) matrix is particularly important - it is related to the standard error for the model parameters - as in: \(\mathcal{V}\{\mathbf{b}\} = \left( \mathbf{X}^T\mathbf{X} \right)^{-1} S_E^2\).

The non-zero off-diagonal elements indicate that the variance of the \(b_1\) coefficient is related to the variance of the \(b_2\) coefficient as well. This result is true for most regression models, indicating we can’t accurately interpret each regression coefficient’s confidence interval on its own.

For the two variable case, \(y = b_1x_1 + b_2x_2\), the general relationship is that:

where \(r^2_{12}\) represents the correlation between variable \(x_1\) and \(x_2\). What happens as the correlation between the two variables increases?

4.10.3. Interpretation of the model coefficients¶

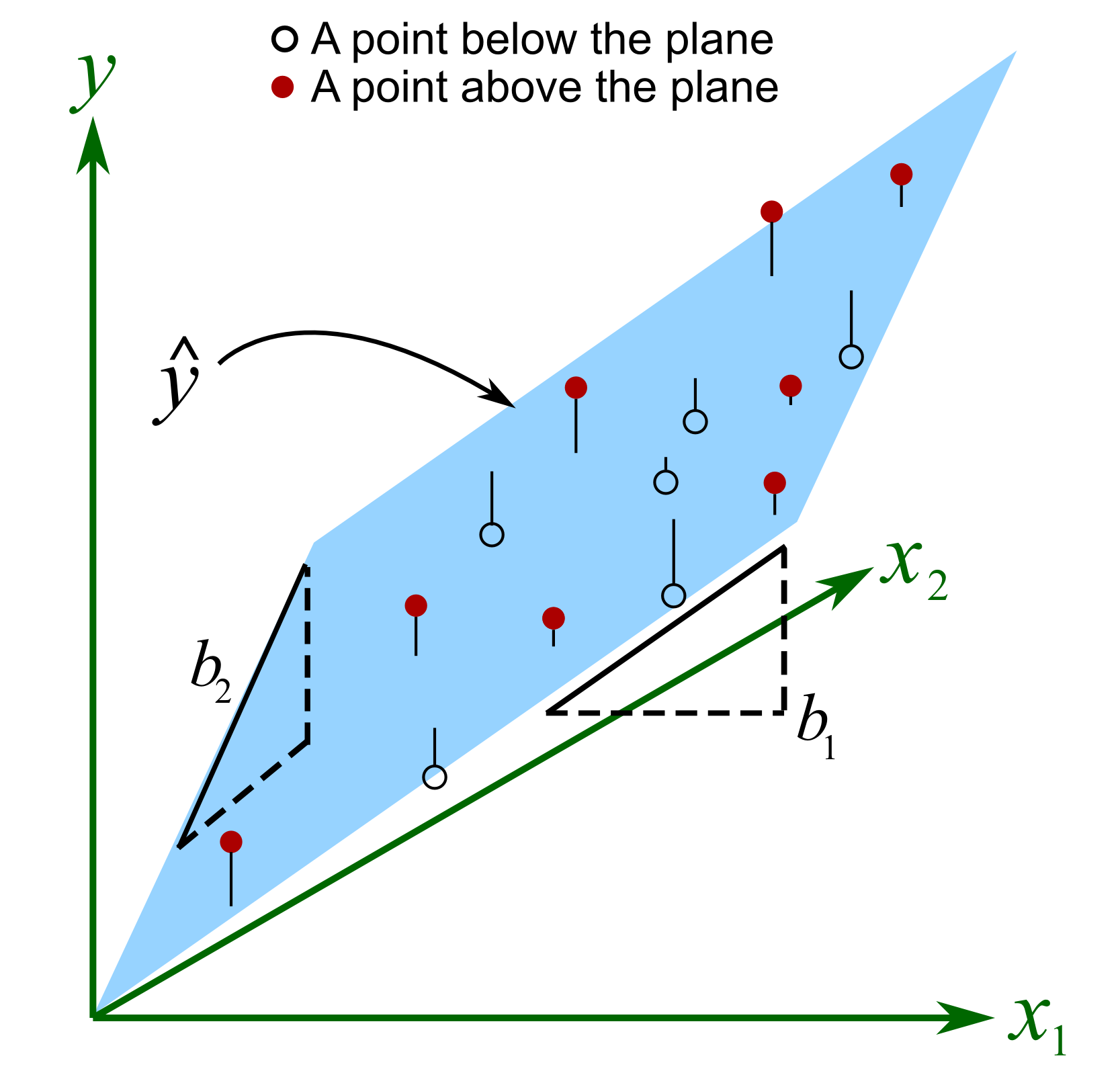

Let’s take a look at the case where \(y = b_1x_1 + b_2x_2\). We can plot this on a 3D plot, with axes of \(x_1\), \(x_2\) and \(y\):

The points are used to fit the plane by minimizing the sum of square distances shown by vertical lines from each point to the plane. The interpretation of the slope coefficients for \(b_1\) and \(b_2\) is not the same as for the case with just a single \(\mathrm{x}\) variable.

When we have multiple \(\mathrm{x}\) variables, then the value of coefficient \(b_1\) is the average change we would expect in \(\mathbf{y}\) for a one unit change in \({x}_1\) provided we hold \({x}_2\) fixed. It is the last part that is new: we must assume that other \(\mathrm{x}\) variables are fixed.

For example, let \(y = b_T T + b_S S = -0.52 T + 3.2 S\), where \(T\) is reactor temperature in Kelvin, and \(S\) is substrate concentration in g/L, and \(y\) is yield in \(\mu\text{g}\), for a bioreactor reactor system. The \(b_T = -0.52 \mu\text{g}/\text{K}\) coefficient is the decrease in yield for every 1 Kelvin increase in temperature, holding the substrate concentration fixed.

This is a good point to introduce some terminology you might come across. Imagine you have a model where \({y}\) is the used vehicle price and \({x}_1\) is the mileage on the odometer (we expect that \(b_1\) will be negative) and \({x}_2\) is the number of doors on the car. You might hear the phrase: “the effect of the number of doors, controlling for mileage, is not significant”. The part “controlling for …” indicates that the controlled variable has been added to regression model, and its effect is accounted for. In other words, for two vehicles with the same mileage, the coefficient \(b_2\) indicates whether the second hand price increases or decreases as the number of doors on the car changes (e.g. a 2-door vs a 4-door car).

In the prior example, we could say: the effect of substrate concentration on yield, controlling for temperature, is to increase the yield by 3.2 \(\mu\text{g}\) for every increase in 1 g/L of substrate concentration.

4.10.4. Integer (dummy, indicator) variables in the model¶

Now that we have introduced multiple linear regression to expand our models, we also consider these sort of cases:

We want to predict yield, but want to indicate whether a radial or axial impeller was used in the reactor and learn whether it has any effect on yield.

Is there an important difference when we add the catalyst first and then the reactants, or the reactants followed by the catalyst?

Use an indicator variable to show if the raw material came from the supplier in Spain, India, or Vietnam and interpret the effect of supplier on yield.

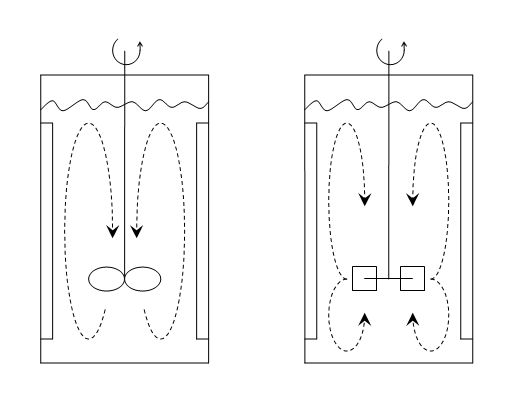

Axial and radial blades; figure from Wikipedia

We will start with the simplest case, using the example of the radial or axial impeller. We wish to understand the effect on yield, \(y [\mu\text{g}]\), as a function of the impeller type, and impeller speed, \(x\).

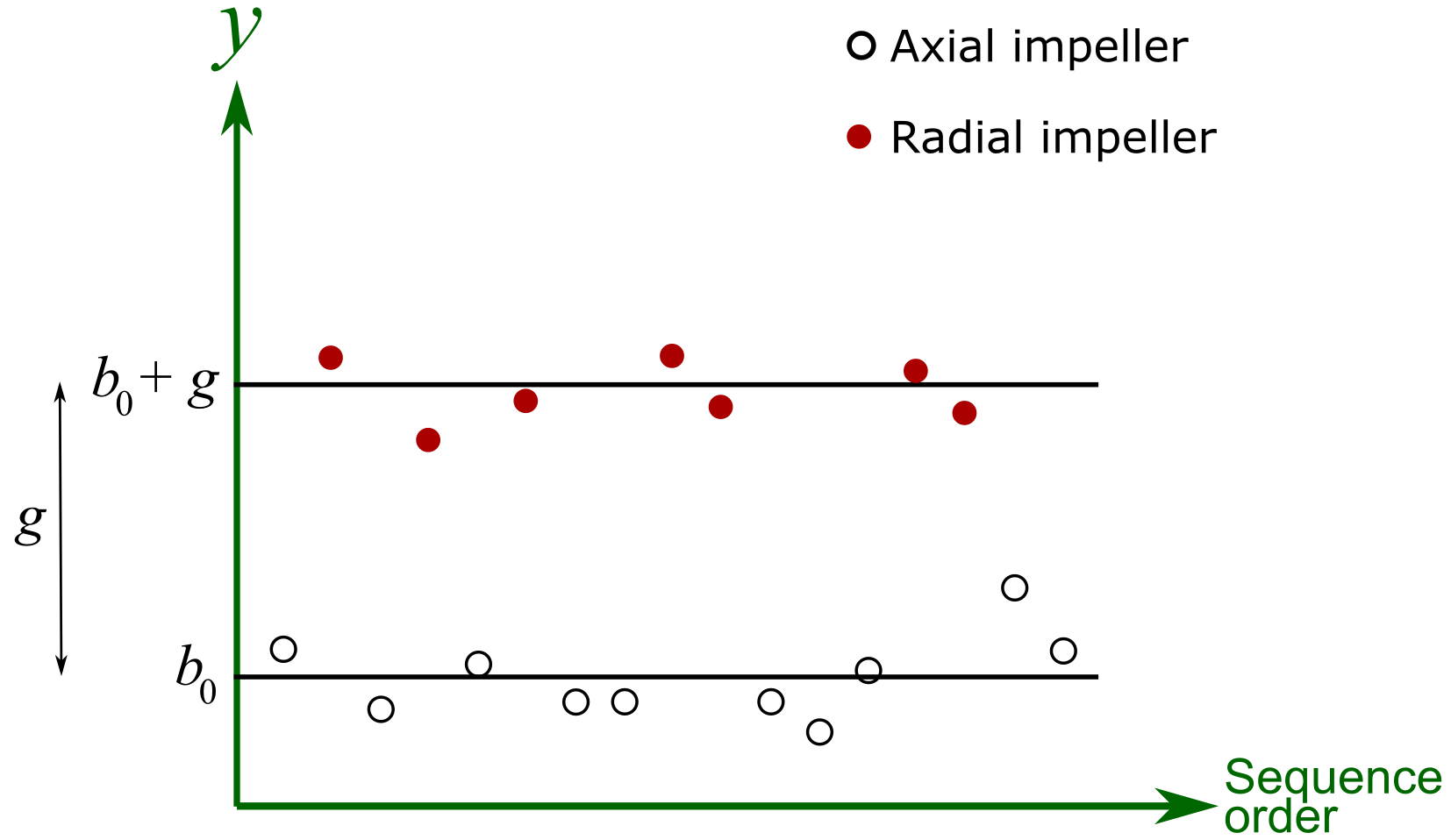

where \(d_i = 0\) if an axial impeller was used, or \(d_i = 1\) if a radial impeller was used. All other least squares assumptions hold, particularly that the variance of \(y_i\) is unrelated to the value of \(d_i\). For the initial discussion let’s assume that \(\beta_1 = 0\), then geometrically, what is happening here is:

The \(\gamma\) parameter, estimated by \(g\), is the difference in intercept when using a different impeller type. Note that the lines are parallel.

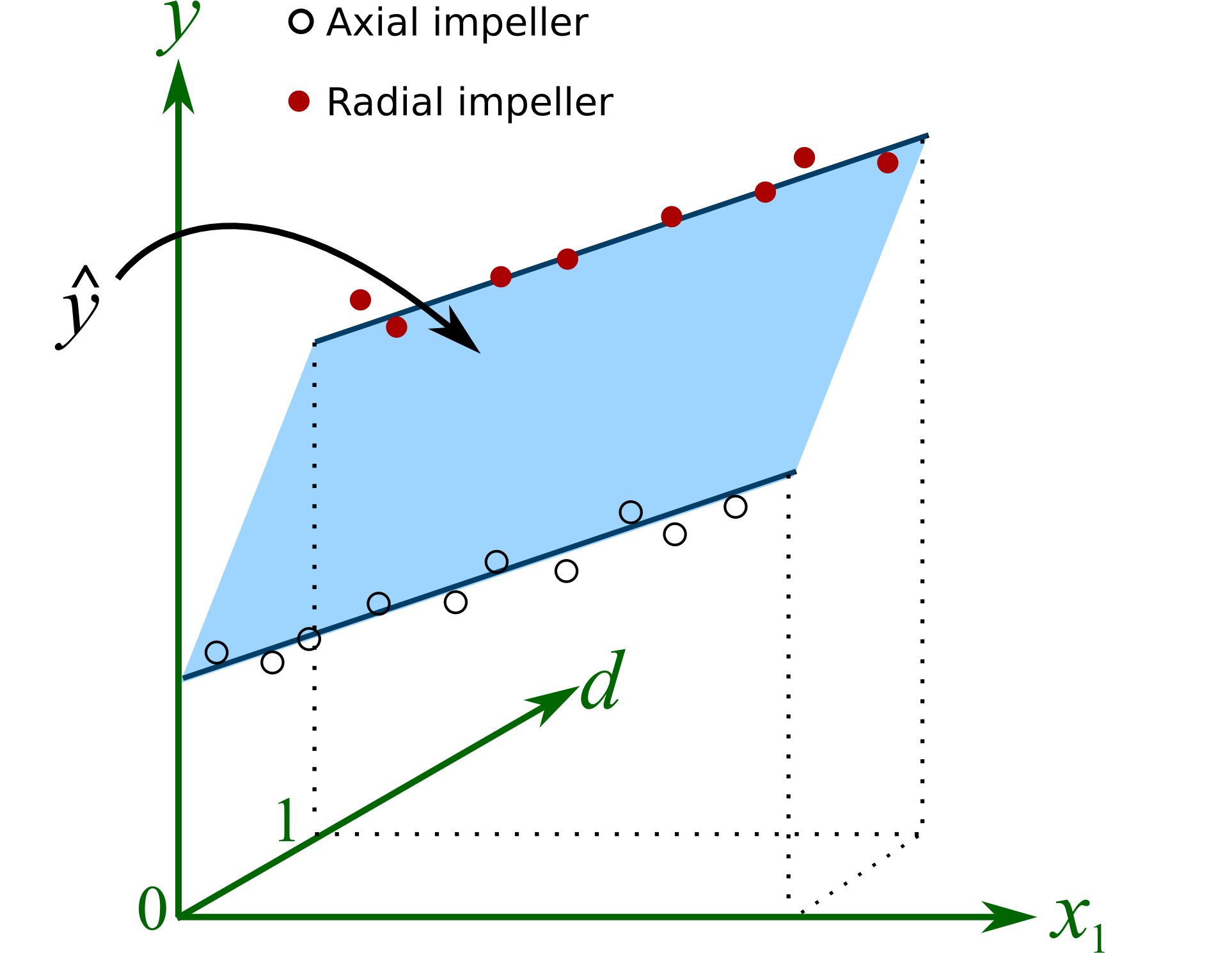

Now if \(\beta_1 \neq 0\), then the horizontal lines in the above figure are tilted, but still parallel to each other. Nothing else is new here, other than the representation of the variable used for \(d_i\). The interpretation of its coefficient, \(g\), is the same as with any other least squares coefficient. In this particular example, had \(g = -56 \mu\text{g}\), it would indicate that the average decrease in yield is 56 \(\mu\text{g}\) when using a radial impeller.

The rest of the analysis tools for least squares models can be used quite powerfully. For example, a 95% confidence interval for the impeller variable might have been:

which would indicate the impeller type has no significant effect on the yield amount, the \(y\)-variable.

Integer variables are also called dummy variables or indicator variables. Really what is happening here is the same concept as for multiple linear regression, the equation of a plane is being estimated. We only use the equation of the plane at integer values of \(d\), but mathematically the underlying plane is actually continuous.

We have to introduce additional terms into the model if we have integer variables with more than 2 levels. In general, if there are \(p\)-levels, then we must include \(p-1\) terms. For example, if we wish to test the effect of \(y\) = yield achieved from the raw material supplier in Spain, India, or Vietnam, we could code:

Spain: \(d_{i1} = 0\) and \(d_{i2} = 0\)

India: \(d_{i1} = 1\) and \(d_{i2} = 0\)

Vietnam: \(d_{i1} = 0\) and \(d_{i2} = 1\).

and solve for the least squares model: \(y = \beta_0 + \beta_1x_1 + \ldots + \beta_k x_k + \gamma_1 d_1 + \gamma_2 d_2 + \varepsilon\), where \(\gamma_1\) is the effect of the Indian supplier, holding all other terms constant (i.e. it is the incremental effect of India relative to Spain); \(\gamma_2\) is the incremental effect of the Vietnamese supplier relative to the base case of the Spanish supplier. Because of this somewhat confusing interpretation of the coefficients, sometimes people will assume they can sacrifice an extra degree of freedom, but introduce \(p\) new terms for the \(p\) levels of the integer variable, instead of \(p-1\) terms.

Spain: \(d_{i1} = 1\) and \(d_{i2} = 0\) and \(d_{i3} = 0\)

India: \(d_{i1} = 0\) and \(d_{i2} = 1\) and \(d_{i3} = 0\)

Vietnam: \(d_{i1} = 0\) and \(d_{i2} = 0\) and \(d_{i3} = 1\)

and \(y = \beta_0 + \beta_1x_1 + \ldots + \beta_k x_k + \gamma_1 d_1 + \gamma_2 d_2 + \gamma_3 d_3 + \varepsilon\), where the coefficients \(\gamma_1, \gamma_2\) and \(\gamma_3\) are assumed to be more easily interpreted. However, calculating this model will fail, because there is a built-in perfect linear combination. The \(\mathbf{X}^T\mathbf{X}\) matrix is not invertible.