4.3. Covariance

You probably have an intuitive sense for what it means when two things are correlated. We will get to correlation next, but we start by first looking at covariance. Let’s take a look at an example to formalize this, and to see how we can learn from data.

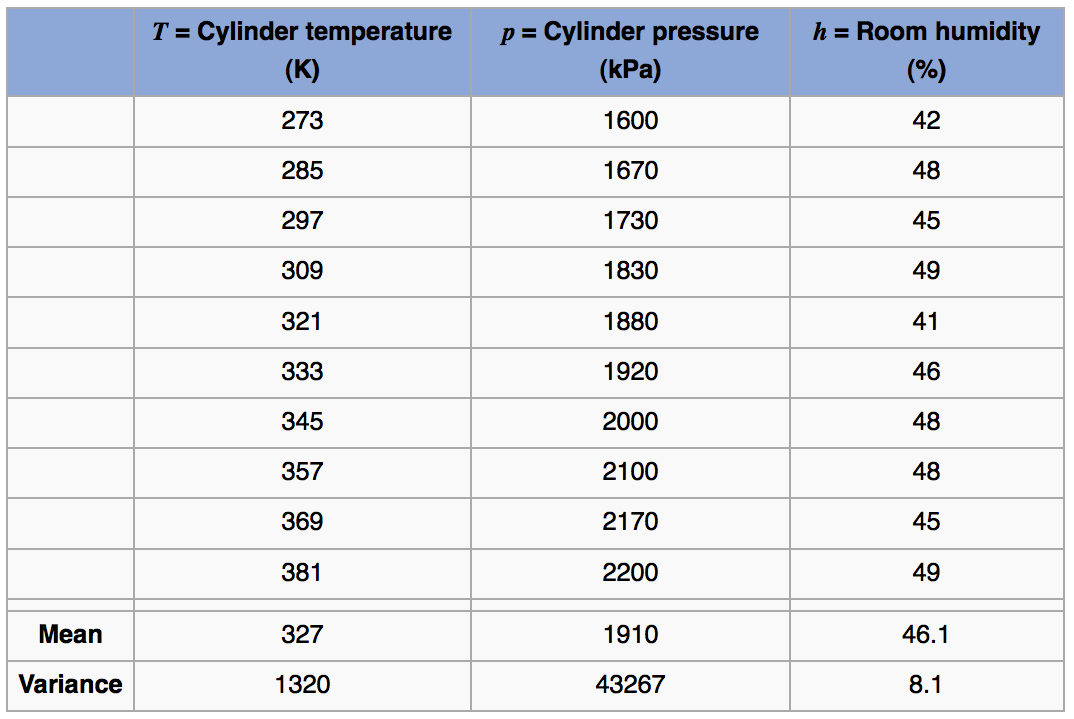

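Consider the measurements from a gas cylinder: temperature (K) and pressure (kPa). We know the ideal gas law applies under moderate conditions: $pV = nRT$.

- Fixed volume, $V = 20 \times 10^{-3}$ m³ = 20 L
- Moles of gas, $n = 14.1$ mols of chlorine gas, molar mass = 70.9 g/mol, so this is 1 kg of gas
- Gas constant, $R = 8.314$ J/(mol.K)

Given these numbers, we can simplify the ideal gas law to a direct proportionality between pressure and temperature:

$$p = \frac{nR}{V}\,T$$

where the constant $nR/V > 0$ is about 5.86 kPa/K.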
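As a quick arithmetic check of this simplification, the R sketch below computes the number of moles from the stated mass and molar mass, and then the proportionality constant $nR/V$ in $p = (nR/V)\,T$. The variable names are illustrative, and $R = 8.314$ J/(mol.K) is assumed as the value of the gas constant.

```r
# Quantities stated in the text (variable names are illustrative)
mass_g     <- 1000     # 1 kg of chlorine gas, in grams
molar_mass <- 70.9     # g/mol
V          <- 20e-3    # 20 L expressed in m^3
R          <- 8.314    # J/(mol.K), assumed value of the gas constant

n <- mass_g / molar_mass             # moles of gas
slope_Pa_per_K  <- n * R / V         # p = (nR/V) * T, in Pa per K
slope_kPa_per_K <- slope_Pa_per_K / 1000

print(round(n, 1))                   # 14.1
print(round(slope_kPa_per_K, 2))     # 5.86
```

So each additional kelvin raises the pressure by roughly 5.86 kPa in this cylinder, which is why temperature and pressure are expected to vary together.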

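The formal definition for covariance between any two variables is:

$$\mathrm{Cov}\{x, y\} = \mathcal{E}\{(x - \bar{x})(y - \bar{y})\} \qquad \text{where} \qquad \mathcal{E}\{z\} = \bar{z}$$

[terminology used here was defined in a previous section]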

Use this to calculate the covariance between temperature and pressure by breaking the problem into steps:

- First calculate deviation variables. They are called this because they are now the deviations from the mean: $T - \bar{T}$ and $p - \bar{p}$. Subtracting the mean from each vector just centers their frame of reference to zero.
- Next multiply the two vectors, element-by-element, to calculate a new vector $(T - \bar{T})(p - \bar{p})$.
- The expected value of this product can be estimated by using the average, or any other suitable measure of location. In this case `mean(product)` in R gives 6780. This is the covariance value.
- More specifically, we should provide the units as well: the covariance between temperature and pressure is 6780 [K.kPa] in this example. Similarly the covariance between temperature and humidity is 202 [K.%].
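The steps above can be sketched in R as follows. The readings are hypothetical values chosen for illustration, not the data set used in the text, so the result differs from 6780. The names `temp.centered`, `pres.centered` and `covariance` are also illustrative.

```r
# Hypothetical readings, for illustration only (not the data set from the text)
temp <- c(280, 300, 320, 340, 360)        # K
pres <- c(1650, 1750, 1880, 1990, 2110)   # kPa

# Step 1: deviation variables (each vector now has a mean of zero)
temp.centered <- temp - mean(temp)
pres.centered <- pres - mean(pres)

# Step 2: element-by-element product of the two deviation vectors
product <- temp.centered * pres.centered

# Step 3: estimate the expected value with the average (this divides by N)
covariance <- mean(product)
print(covariance)   # 4640, in units of K.kPa
```

Replacing `mean()` in the last step with another measure of location, such as `median()`, gives a different estimate of the same expected value.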

In your own time, calculate a rough numeric value and give the units of covariance for these cases:

- $x$ = age of married partner 1; $y$ = age of married partner 2
- $x$ = gas pressure; $y$ = gas volume at a fixed temperature
- $x$ = mid term mark for this course; $y$ = final exam mark
- $x$ = hours worked per week; $y$ = weekly take home pay
- $x$ = cigarettes smoked per month; $y$ = age at death
- $x$ = temperature on top tray of distillation column; $y$ = top product purity

Also describe what an outlier observation would mean in these cases.

One last point is that the covariance of a variable with itself is the variance:

$$\mathrm{Cov}\{x, x\} = \mathcal{V}\{x\} = \mathcal{E}\{(x - \bar{x})(x - \bar{x})\}$$

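Using the `cov(temp, pres)` function in R gives 7533.333, while we calculated 6780. The difference comes from the divisor: $6780 \times \frac{N}{N-1} = 7533.33$, which is consistent with $N = 10$ observations and indicates that R divides by $N-1$ rather than $N$. This is because the variance function in R for a vector `x` is internally called as `cov(x, x)`. Since R returns the unbiased variance, it divides through by $N-1$.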
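The divisor can be checked directly. The sketch below uses hypothetical readings (not the data set from the text) to compare the average of the product of deviations, which divides by $N$, against R's built-in `cov()`, which divides by $N-1$. The last line applies the same factor to the two values quoted above, where $N = 10$ is inferred from their ratio rather than stated in the text.

```r
# Hypothetical readings, for illustration only
temp <- c(280, 300, 320, 340, 360)        # K
pres <- c(1650, 1750, 1880, 1990, 2110)   # kPa
N <- length(temp)

# Average of the product of deviation variables: divides by N
cov.N <- mean((temp - mean(temp)) * (pres - mean(pres)))

# Built-in function: divides by N - 1
cov.builtin <- cov(temp, pres)

# The two differ by exactly the factor N / (N - 1)
print(all.equal(cov.N * N / (N - 1), cov.builtin))   # TRUE

# The variance is the covariance of a variable with itself
print(all.equal(var(temp), cov(temp, temp)))         # TRUE

# The same factor links the two values quoted in the text, assuming N = 10
print(6780 * 10 / 9)                                 # 7533.333
```

For large $N$ the factor $N/(N-1)$ approaches 1, so the two estimates become practically indistinguishable.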

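Note that deviation variables are not affected by a shift in the raw data of $x$ or $y$. For example, measuring temperature in Celsius or Kelvin has no effect on the covariance, whereas a change of scale, such as Celsius to Fahrenheit, does change it.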
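A small R sketch of this shift invariance, using hypothetical readings that are not from the text: converting Kelvin to Celsius only shifts the data, so the covariance is unchanged, while converting to Fahrenheit also rescales the data, so the covariance changes by the scale factor.

```r
# Hypothetical readings, for illustration only
temp.K <- c(280, 300, 320, 340, 360)        # K
pres   <- c(1650, 1750, 1880, 1990, 2110)   # kPa

temp.C <- temp.K - 273.15       # shift only: Kelvin to Celsius
temp.F <- temp.C * 9 / 5 + 32   # shift and scale: Celsius to Fahrenheit

print(cov(temp.K, pres))   # 5800
print(cov(temp.C, pres))   # 5800: the shift cancels in the deviation variables
print(cov(temp.F, pres))   # 10440: larger by the scale factor 9/5
```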

4.4. Correlation

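The variance and covariance values are units dependent. For example, you get a very different covariance when calculating it using grams vs kilograms. The correlation, on the other hand, removes the effect of scaling and arbitrary unit changes. It is defined as:

$$r(x, y) = \frac{\mathrm{Cov}\{x, y\}}{\sqrt{\mathcal{V}\{x\}\,\mathcal{V}\{y\}}} = \frac{\mathcal{E}\{(x - \bar{x})(y - \bar{y})\}}{\sqrt{\mathcal{V}\{x\}\,\mathcal{V}\{y\}}}$$

It takes the covariance value and divides through by the units of $x$ and of $y$ to obtain a dimensionless result. The values of $r(x, y)$ range from $-1$ to $+1$, and $r(x, y) = r(y, x)$.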
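As an illustration, the sketch below computes the correlation as the covariance divided by the square root of the product of the two variances, and compares it with R's built-in `cor()`. The readings are hypothetical, not the data set from the text. Because the same divisor ($N-1$) appears in the numerator and the denominator, it cancels, so the choice of $N$ or $N-1$ does not affect the correlation.

```r
# Hypothetical readings, for illustration only
temp <- c(280, 300, 320, 340, 360)        # K
pres <- c(1650, 1750, 1880, 1990, 2110)   # kPa

# Correlation: covariance scaled by the square root of both variances
r.manual <- cov(temp, pres) / sqrt(var(temp) * var(pres))
print(all.equal(r.manual, cor(temp, pres)))             # TRUE

# Changing units rescales the covariance but leaves the correlation unchanged
pres.Pa <- pres * 1000                                  # kPa to Pa
print(cov(temp, pres.Pa) / cov(temp, pres))             # 1000
print(all.equal(cor(temp, pres.Pa), cor(temp, pres)))   # TRUE
```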

Returning to our example of the gas cylinder, the correlation between temperature and pressure, and between temperature and humidity, can now be calculated as:

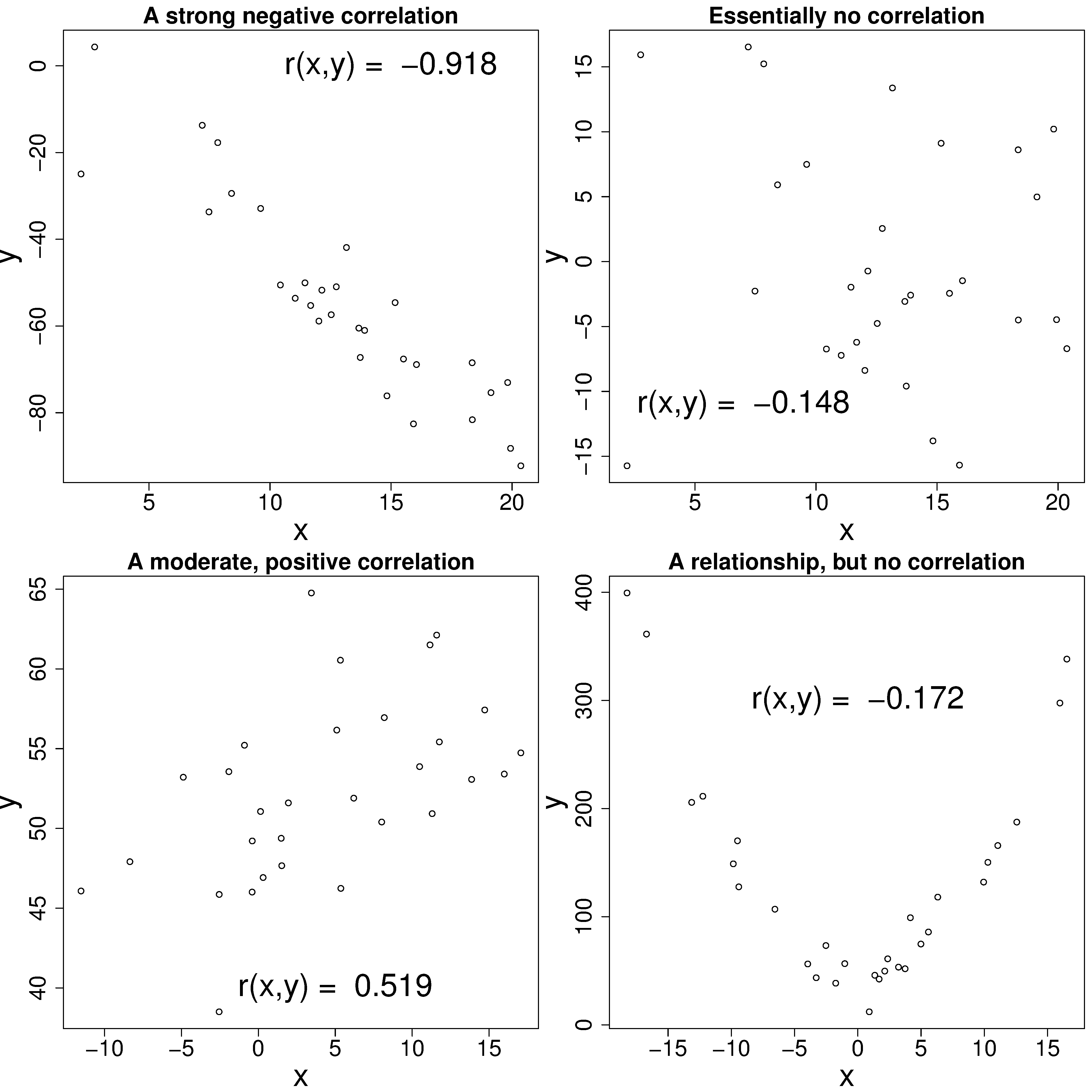

Note that correlation is the same whether we measure temperature in Celsius or Kelvin. Study the plots here to get a feeling for the correlation value and its interpretation:

4.5. Some definitions

Be sure that you can derive (and interpret!) these relationships, which are derived from the definition of the covariance and correlation:

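- $\mathrm{Cov}\{x, y\} = \mathcal{E}\{(x - \bar{x})(y - \bar{y})\}$, which we take as the definition for covariance
- $\mathcal{V}\{x + y\} \neq \mathcal{V}\{x\} + \mathcal{V}\{y\}$, which is counter to what might be expected. Rather:

$$\mathcal{V}\{x + y\} = \mathcal{V}\{x\} + \mathcal{V}\{y\} + 2\,\mathrm{Cov}\{x, y\} \qquad (3)$$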
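The variance of a sum equals the sum of the variances plus twice the covariance, $\mathcal{V}\{x + y\} = \mathcal{V}\{x\} + \mathcal{V}\{y\} + 2\,\mathrm{Cov}\{x, y\}$, and this can be verified numerically. The sketch below uses two hypothetical, unitless vectors; the names `lhs`, `naive` and `rhs` are illustrative.

```r
# Hypothetical, unitless values, for illustration only
x <- c(280, 300, 320, 340, 360)
y <- c(1650, 1750, 1880, 1990, 2110)

lhs   <- var(x + y)                       # variance of the sum
naive <- var(x) + var(y)                  # ignores the covariance term
rhs   <- var(x) + var(y) + 2 * cov(x, y)  # includes the covariance term

print(all.equal(lhs, rhs))                     # TRUE
print(all.equal(lhs - naive, 2 * cov(x, y)))   # TRUE
```

The naive sum matches only when the covariance between the two variables is zero.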